01 Apr Natural Language Processing (NLP)

Introduction

Natural language processing seeks to build machines that understand text or voice data and respond with text or speech of their own, in much the same way humans do. NLP draws on many disciplines, including computer science and computational linguistics, to bridge the gap between human communication and computer understanding.

What is Natural Language Processing?

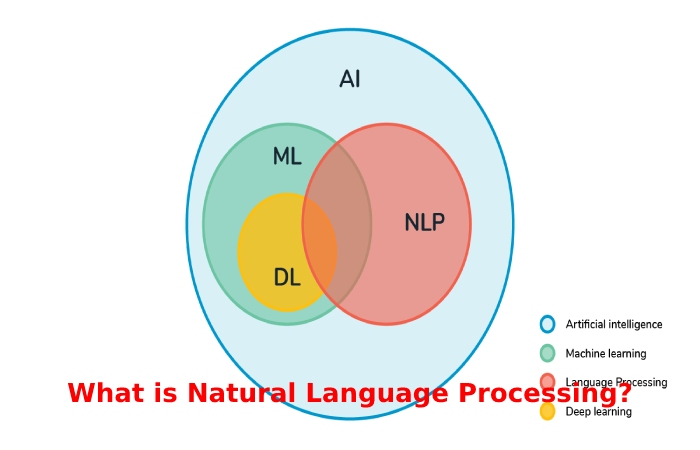

Natural language processing (NLP) is a branch of computer science, and more specifically of artificial intelligence (AI), that aims to give computers the ability to understand text and spoken words in much the same way people do.

NLP combines computational linguistics (rule-based modeling of human language) with statistical, machine learning, and deep learning models. Together, these technologies enable computers to process human language as text or voice data and to “understand” its full meaning, including the intent and purpose of the writer or speaker.

NLP drives computer programs that translate text from one language to another, respond to voice commands, and quickly summarize large volumes of text, even in real time. There is a good chance that you have interacted with NLP in the form of voice-controlled GPS systems, digital assistants, speech-to-text dictation software, customer service chatbots, and other consumer conveniences. But NLP also plays a growing role in enterprise solutions that help streamline business operations, increase employee productivity, and simplify mission-critical business processes.

Why is Natural Language Processing (NLP) important?

Large volumes of textual data

Natural language processing helps computers communicate with humans in their own language and scales other language-related tasks. For example, NLP allows computers to read text, hear speech, interpret it, measure sentiment, and determine which parts are important.

Today’s machines can analyze more language-based data than humans can, without fatigue and in a consistent way. With so much unstructured data being generated daily, from health records to social media, automation will be essential to analyzing text and voice data efficiently.

Structuring a Highly Unstructured Data Source

Human language is incredibly complex and diverse. We express ourselves in countless ways, both orally and in writing. Not only are there many languages and dialects, but each language has its own set of grammar and syntax rules, terms, and slang. When we write, we often misspell or abbreviate words or omit punctuation. When we speak, we have regional accents, mumble, stutter, and borrow terms from other languages.

While supervised and unsupervised learning, and deep learning in particular, are now widely used to model human language, there is also a need for syntactic and semantic understanding and for domain expertise that are not necessarily present in these machine-learning approaches. NLP is important because it helps resolve ambiguity in language and adds useful numeric structure to the data for many downstream applications, such as speech recognition or text analytics.
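One common way to add numeric structure to text is a bag-of-words count vector. The sketch below is illustrative only and uses the Python standard library; the function names `bag_of_words` and `to_vector` and the two sample sentences are assumptions made for this example, not something the article prescribes.

```python
import re
from collections import Counter


def bag_of_words(text):
    """Lowercase the text, split it into word tokens, and count each token."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return Counter(tokens)


def to_vector(counts, vocabulary):
    """Lay the token counts out in a fixed vocabulary order."""
    return [counts[word] for word in vocabulary]


# Hypothetical sample documents, used only for illustration.
docs = [
    "The patient reports mild pain.",
    "Pain is mild; the patient is stable.",
]

counts = [bag_of_words(d) for d in docs]
# The shared vocabulary is every distinct token seen in any document.
vocabulary = sorted(set().union(*counts))
vectors = [to_vector(c, vocabulary) for c in counts]

print(vocabulary)  # ['is', 'mild', 'pain', 'patient', 'reports', 'stable', 'the']
print(vectors)     # [[0, 1, 1, 1, 1, 0, 1], [2, 1, 1, 1, 0, 1, 1]]
```

Each document becomes a row of numbers of the same length, which is the kind of structure that downstream models can consume. Word order is lost in this representation, which is one reason syntactic and semantic analysis is still needed.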

How does Natural Language Processing (NLP) work?

Natural Language Processing (NLP) includes various techniques for interpreting human language, ranging from statistical and machine-learning methods to rule-based and algorithmic approaches. We need a broad array of approaches because text- and voice-based data vary widely, as do the practical applications.

The basic tasks of NLP include tokenization and parsing, lemmatization/stemming, part-of-speech tagging, language detection, and identification of semantic relationships. If you ever diagrammed sentences in elementary school, you have done these tasks manually.

Generally speaking, NLP tasks break language down into shorter, elemental pieces, try to understand the relationships between the pieces, and explore how the pieces work together to create meaning.
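As a rough illustration of breaking language into elemental pieces, the sketch below tokenizes a sentence and then applies a deliberately crude suffix-stripping stemmer. The names `tokenize`, `crude_stem`, and `SUFFIXES` are hypothetical, and the four-suffix rule list is an assumption for this example; established stemmers such as Porter's use far more rules.

```python
import re


def tokenize(text):
    """Split text into lowercase word tokens, dropping punctuation."""
    return re.findall(r"[a-z0-9]+(?:'[a-z]+)?", text.lower())


# Hypothetical, deliberately tiny suffix list, checked in this order.
SUFFIXES = ("ing", "ed", "es", "s")


def crude_stem(token):
    """Strip the first matching suffix, keeping at least three characters."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


sentence = "The parser breaks sentences into tokens."
tokens = tokenize(sentence)
stems = [crude_stem(t) for t in tokens]

print(tokens)  # ['the', 'parser', 'breaks', 'sentences', 'into', 'tokens']
print(stems)   # ['the', 'parser', 'break', 'sentenc', 'into', 'token']
```

Note that a stem such as `sentenc` is not a dictionary word. Stemming only trims suffixes, whereas lemmatization maps a word to its dictionary form and typically needs a vocabulary and part-of-speech information.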

These underlying tasks are often used in higher-level NLP capabilities, such as:

- Content categorization. A linguistic-based document summary, including search and indexing, content alerts, and duplication detection.

- Topic discovery and modeling. Accurately capture the meaning and themes in text collections, and apply advanced analytics to text, such as optimization and forecasting.

- Contextual extraction. Automatically extract structured information from text-based sources.

- Sentiment analysis. Identify the mood or subjective opinions within large amounts of text, including average sentiment and opinion mining.

- Speech-to-text and text-to-speech conversion. Transform voice commands into typed text and vice versa.

- Document summarization. Automatic generation of synopses of large bodies of text.

- Machine translation. Automatic conversion of text or speech from one language to another.

In all these cases, the overall goal is to take natural language input and use linguistics and algorithms to transform or enrich the text so that it delivers greater value.
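To make one of these capabilities concrete, here is a minimal sketch of lexicon-based sentiment analysis, including the average sentiment over a small set of texts. The `LEXICON` weights, the function names, and the sample reviews are all made up for illustration; production systems generally rely on much larger lexicons or on trained models.

```python
import re

# Hypothetical toy lexicon: positive words score above 0, negative below 0.
LEXICON = {
    "good": 1, "great": 2, "helpful": 1,
    "bad": -1, "slow": -1, "terrible": -2,
}


def sentiment_score(text):
    """Sum the lexicon weights of the words in the text; unknown words add 0."""
    words = re.findall(r"[a-z]+", text.lower())
    return sum(LEXICON.get(word, 0) for word in words)


def label(score):
    """Turn a numeric score into a coarse sentiment label."""
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


# Hypothetical sample reviews.
reviews = [
    "Great support, very helpful staff.",
    "The app is slow and the update was terrible.",
    "It arrived on Tuesday.",
]

scores = [sentiment_score(r) for r in reviews]
labels = [label(s) for s in scores]
average = sum(scores) / len(scores)

print(scores)   # [3, -3, 0]
print(labels)   # ['positive', 'negative', 'neutral']
print(average)  # 0.0
```

A word-counting approach like this ignores negation and context ("not good" would still score as positive), which is where the syntactic and semantic analysis described earlier comes in.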

Conclusion

Natural language processing is significant because it helps resolve ambiguity in language and adds useful numeric structure to the data for many downstream applications, such as speech recognition or text analytics.
